Overview

We provide simple examples for use of the gsdmvn package for deriving fixed and group sequential designs under non-proportional hazards. The piecewise model for enrollment, failure rates, dropout rates and changing hazard ratio over time allow great flexibility in design assumptions. Users are encouraged to suggest features that would be of immediate and long-term interest to add.

Topics included here are:

- Packages required and how they are used.

- Specifying enrollment rates.

- Specifying failure and dropout rates with possibly changing hazard ratio over time.

- Deriving a fixed design with no interim analysis.

- Simple boundary specification for group sequential design.

- Deriving a group sequential design under non-proportional hazards.

- Displaying design properties.

- Design properties under alternate assumptions.

- Differences from gsDesign.

- Future enhancement priorities.

All of these items are discussed briefly to enable a quick start for early adopters while also suggesting the ultimate possibilities that the software enables. Finally, while the final section provides current enhancement priorities, potential topic-related enhancements are discussed throughout the document.

Packages Used

- The gsdmvn package is used here to implement group sequential distribution theory under non-proportional hazards and to derive a wide variety of boundary types for group sequential designs.

- The gsDesign package is used as a check for results under proportional hazards as well as a source from deriving bounds using spending functions.

- The gsDesign2 package provides computations to compute expected event accumulation and average hazard ratio over time; these are key inputs to the group sequential distribution parameters.

- The simtrial package is used to verify design properties using simulation.

The gsdmvn package will likely will likely be incorporated eventually into the gsDesign2 package, resulting in a fully featured design package. However, features and implementation in gsdmvn will be allowed to change as needed during the agile rapid development phase.

Enrollment Rates

Piecewise constant enrollment rates are input in a tabular format. Here we assume enrollment will ramp-up with 25%, 50%, and 75% of the final enrollment rate for 2 months each followed by a steady state 100% enrollment for another 6 months. The rates will be increased later to power the design appropriately. However, the fixed enrollment rate periods will remain unchanged.

Failure and Dropout Rates

Constant failure and dropout rates are specified by study period and stratum; we consider a single stratum here. A hazard ratio is provided for treatment/control hazard rate for each period and stratum. The dropout rate for each period is assumed the same for each treatment group; this restriction could be eliminated in a future version, if needed. Generally, we take advantage of the identity for an exponential distribution with median \(m\), the corresponding failure rate \(\lambda\) is

\[\lambda = \log(2) / m.\]

We consider a control group exponential time-to-event with a 12 month median. We assume a hazard ratio of 1 for 4 months, followed by a hazard ratio of 0.6 thereafter. Finally, we assume a low 0.001 exponential dropout rate for both treatment groups.

medianSurv <- 12

failRates <- tibble::tibble(

Stratum = "All",

duration = c(4, Inf),

failRate = log(2) / medianSurv,

hr = c(1, .6),

dropoutRate = .001

)

failRates

#> # A tibble: 2 × 5

#> Stratum duration failRate hr dropoutRate

#> <chr> <dbl> <dbl> <dbl> <dbl>

#> 1 All 4 0.0578 1 0.001

#> 2 All Inf 0.0578 0.6 0.001Fixed Design

Under the above enrollment, failure and dropout rate assumptions we now derive sample size for a trial targeted to complete in 36 months with no interim analysis, 90% power and 2.5% Type I error. The parameter upar = qnorm(1 - .025) in this case is the upper bound for the single analysis, while the parameter beta = 0.1 is the Type II error (1 - power). The information fraction IF = 1 at the final analysis is just a statement that the analysis is done with 100% of the design planned endpoints. Finally, lpar = -Inf just means there is no futility bound for the design. The design can be derived under these assumptions.

# Type I error

alpha <- .025

design <-

gs_design_ahr(

enrollRates = enrollRates,

failRates = failRates,

alpha = alpha,

beta = .1, # Type II error = 1 - power

analysisTimes = 36, # Planned trial duration

IF = 1, # Single analysis at information-fraction of 1

upar = qnorm(1 - alpha), # Final analysis bound

lpar = -Inf # No futility bound

)There are three components to the resulting design.

names(design)

#> [1] "enrollRates" "failRates" "bounds"First, the enrollment rates for each period have been increased proportionately to size the trial for the desired properties; the duration for each enrollment rate has not changed.

| Stratum | duration | rate |

|---|---|---|

| All | 2 | 12.04701 |

| All | 2 | 24.09401 |

| All | 2 | 36.14102 |

| All | 6 | 48.18802 |

The output failRates are the same as input. The output bounds are just an upper bound of \(\Phi^{-1}(1-\alpha)\) and a lower bound of \(-\infty\). The targeted time of the single analysis is in the Time column. The average hazard ratio used to compute the sample size based on the Schoenfeld (1981) approximation is in AHR. The targeted events at the analysis is in the column Events. The targeted time of the analysis is in Time. The power is in the Probability column on the row with the Upper bound. The parameter theta is the natural parameter for the effect size as outlined in Jennison and Turnbull (2000) and elsewhere in this package; in this case it is -log(AHR). The variables info and info0 are statistical information at each analysis under the alternate and null hypothesis, respectively.

design$bounds

#> # A tibble: 1 × 11

#> Analysis Bound Time N Events Z Probability AHR theta info info0

#> <dbl> <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

#> 1 1 Upper 36 434. 315. 1.96 0.9 0.693 0.366 77.7 78.8We note that the targeted Events are approximately what would be proposed with the Schoenfeld (1981) formula:

gsDesign::nEvents(hr = design$bounds$AHR)

#> [1] 313.535The difference is that gs_design_ahr() accounts for both a null and alternate hypothesis variance estimate for theta=log(AHR) at each analysis to yield a slightly more conservative event target due to slower accumulation of statistical information under the alternate hypothesis. See Lachin (2009) for this approach for fixed design; to our knowledge, this has not previously been extended to group sequential design. Due to simplicity, there may be reasons to allow approaches with a single variance estimate in future releases.

Finally, we note that with a shorter trial duration of 30 months, we need both a larger sample size and targeted number of events due to a larger expected AHR at the time of analysis:

gs_design_ahr(

enrollRates = enrollRates,

failRates = failRates,

alpha = alpha,

beta = .1, # Type II error = 1 - power

analysisTimes = 30, # Planned trial duration

IF = 1, # single analysis at information-fraction of 1

upar = qnorm(1 - alpha), # Final analysis bound

lpar = -Inf # No futility bound

)$bounds %>% kable(digits = c(0, 0, 1, 0, 0, 3, 4, 3, 3, 2, 2))| Analysis | Bound | Time | N | Events | Z | Probability | AHR | theta | info | info0 |

|---|---|---|---|---|---|---|---|---|---|---|

| 1 | Upper | 30 | 535 | 348 | 1.96 | 0.9 | 0.705 | 0.349 | 85.35 | 86.88 |

Group Sequential Design

We will not go into detail for group sequential designs here. In brief, however, a sequence of tests \(Z_1, Z_2,\ldots, Z_K\) that follow a multivariate normal distribution are peformed to test if a new treatment is better than control Jennison and Turnbull (2000). We assume \(Z_k>0\) is favorable for the experimental treatment. Generally Type I error for this set of tests will be controlled under the null hypothesis of no treatment difference by a sequence of bounds \(b_1, b_2,\ldots,b_K\) such that for a chosen Type I error \(\alpha > 0\) we have

\[\alpha = 1 - P_0(\cap_{k=1}^K Z_k < b_k)\] Where \(P_0()\) refers to a probability under the null hypothesis. This is referred to as a non-binding bound since it is assumed the trial will not be stopped early for futility if some \(Z_k\) is small.

Simple Efficacy Bound Definition

Lan and DeMets (1983) developed the spending function method for deriving group sequential bounds. This involves use of a non-decreasing spending function \(f(t)\) for \(t\ge 0\) where \(f(0)=0\) and \(f(t)=\alpha\) for \(t \ge 1\). Suppose for \(K>0\) analyses are performed when proportion \(t_1< t_2 <\ldots t_K=1\) of some planned statistical information (e.g., proportion of planned events for a time-to-event endpoint trial for proportion of observations for a binomial or normal endpoint). Bounds through the first \(k\) analyses \(1\le k\le K\) are recursively defined by the spending function and the multivariate normal distribution to satisfy

\[f(t_k) = 1 - P_0(\cap_{j=1}^k Z_j < b_j).\] For this quick start, we will only illustrate this type of efficacy bound.

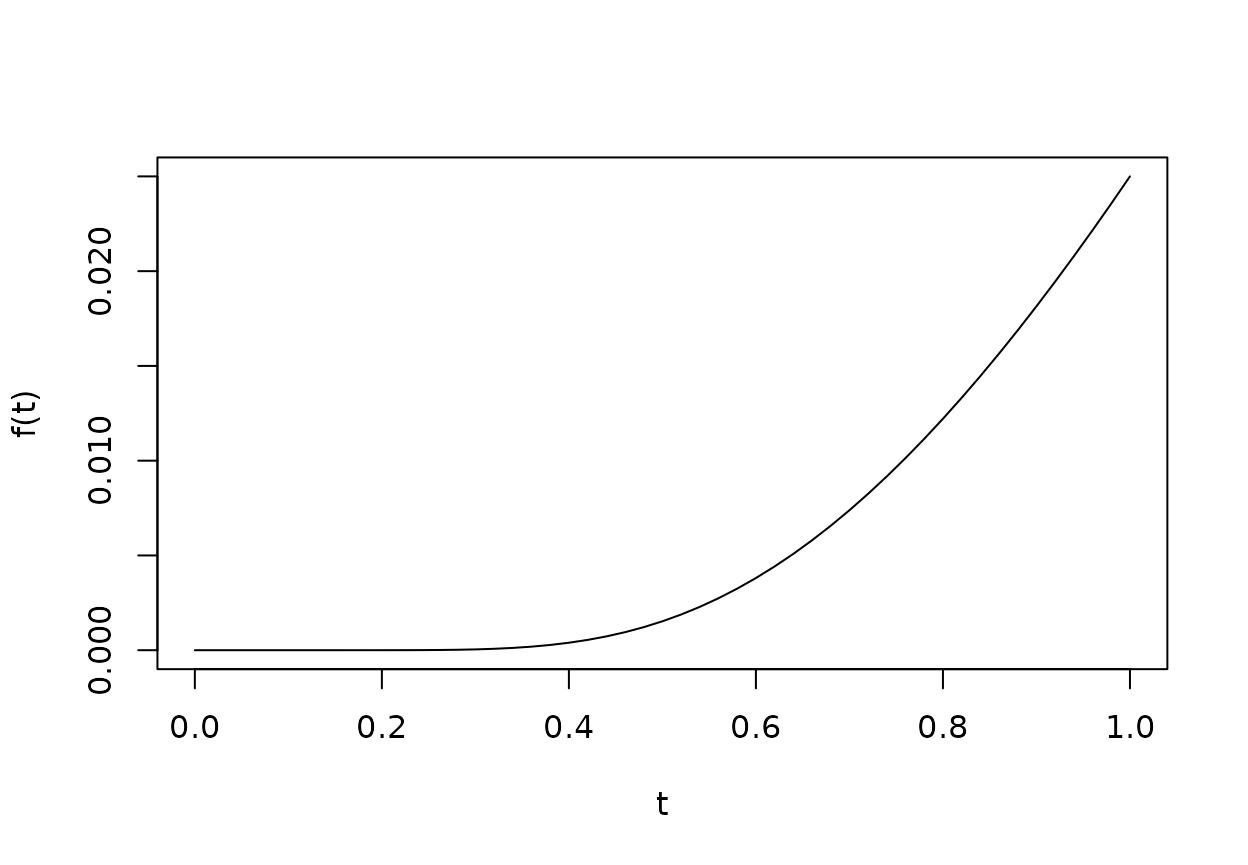

Perhaps the most common spending function for this approach is the Lan and DeMets (1983) approximation to the O’Brien-Fleming bound with

\[f(t) = 2-2\Phi\left(\frac{\Phi^{-1}(1-\alpha/2)}{t^{1/2}}\right).\]

Suppose \(K=3\) and \(t_1=0.5\), \(t_2 = 0.75\), \(t_3 = 1\). We can define bounds with the gsDesign group sequential design function gsDesign() and Lan-DeMets O’Brien-Fleming spending function for \(\alpha = 0.025\).

b <- gsDesign::gsDesign(k = 3, timing = c(0.5, 0.75, 1), test.type = 1, alpha = 0.025, sfu = gsDesign::sfLDOF)$upper$bound

b

#> [1] 2.962588 2.359018 2.014084Now we can define a one-sided group sequential design under the same enrollment, failure and dropout assumptions used previously.

design1s <- gs_design_ahr(

enrollRates = enrollRates,

failRates = failRates,

analysisTimes = 36, # Trial duration

upper = gs_spending_bound,

upar = list(sf = gsDesign::sfLDOF, total_spend = 0.025),

lower = gs_b,

lpar = rep(-Inf, 3), # No futility bound

IF = c(.5, .75, 1)

)Bounds at the 3 analyses are as follows; note that expected sample size at time of each data cutoff for analysis is also here in N. We filter on the upper bound so that lower bounds with Z = -Inf are not shown.

| Analysis | Bound | Time | N | Events | Z | Probability | AHR | theta | info | info0 |

|---|---|---|---|---|---|---|---|---|---|---|

| 1 | Upper | 16.3 | 451 | 164 | 2.963 | 0.0594 | 0.801 | 0.221 | 40.14 | 40.97 |

| 2 | Upper | 23.9 | 451 | 246 | 2.359 | 0.5425 | 0.728 | 0.318 | 60.14 | 61.45 |

| 3 | Upper | 36.0 | 451 | 328 | 2.014 | 0.9000 | 0.693 | 0.366 | 80.82 | 81.94 |

The boundary crossing probabilities column labeled Probability here are under the alternate hypothesis. These are cumulative probabilities totaling 0.9 at the final analysis, representing 90% power. If we wish to see the boundary probabilities under the null hypothesis, we can change the hazard ratio to 1 in the input failRates, use the output enrollRates from designs as follows.

gs_power_ahr(

enrollRates = design1s$enrollRates,

failRates = design1s$failRates %>% mutate(hr = 1),

upper = gs_spending_bound,

upar = list(sf = gsDesign::sfLDOF, total_spend = 0.025),

lpar = rep(-Inf, 3), # No futility bound

events = design1s$bound$Events[1:3]

) %>%

filter(Bound == "Upper") %>%

kable(digits = c(0, 0, 1, 0, 0, 3, 4, 3, 3, 2, 2))| Analysis | Bound | Time | Events | Z | Probability | AHR | theta | info | info0 |

|---|---|---|---|---|---|---|---|---|---|

| 1 | Upper | 15.3 | 164 | 3 | 0.002 | 1 | 0 | 40.969 | 40.97 |

| 2 | Upper | 21.2 | 246 | 2 | 0.010 | 1 | 0 | 61.454 | 61.45 |

| 3 | Upper | 30.4 | 328 | 2 | 0.025 | 1 | 0 | 81.939 | 81.94 |

Two-Sided Testing

We will consider both symmetric and asymmetric 2-sided designs.

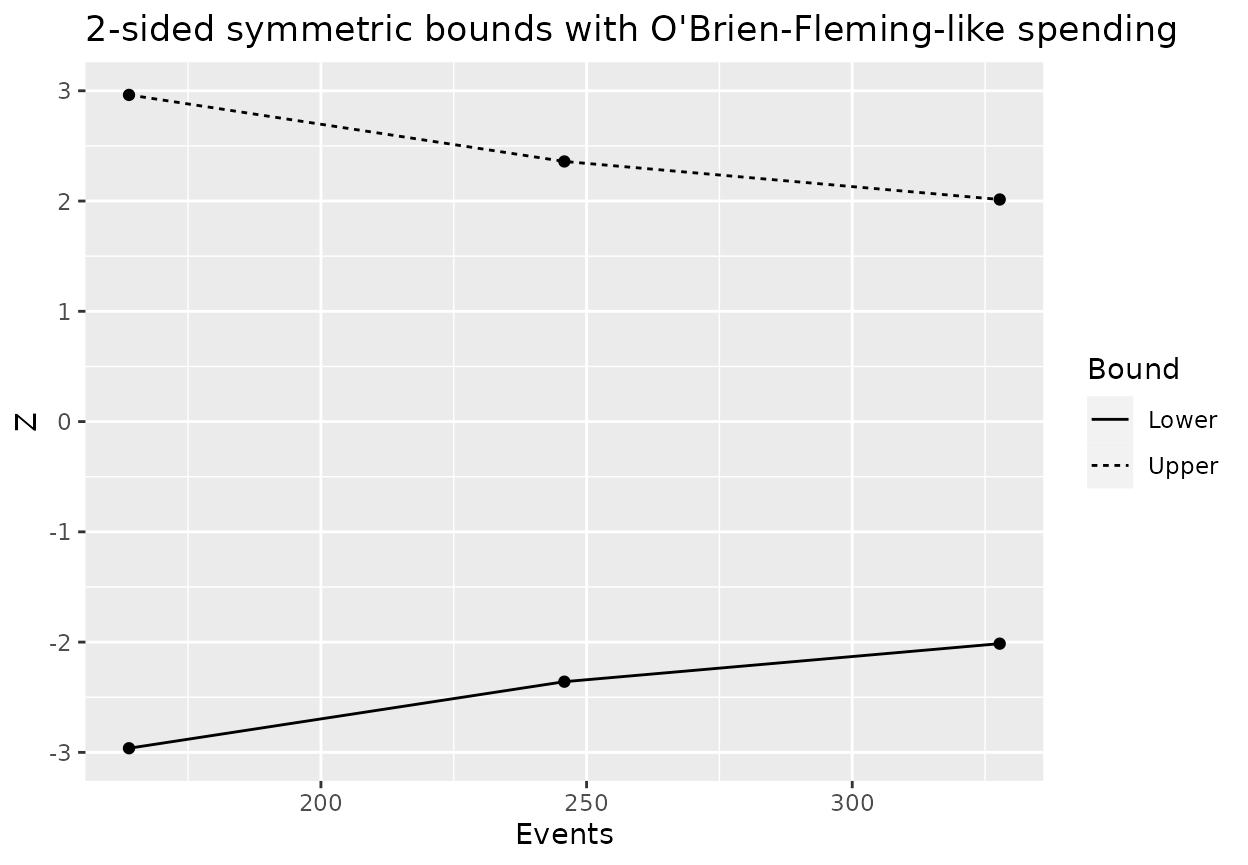

Symmetric 2-sided bounds

For a symmetric design, we can again define a bound using gsDesign::gsDesign(). For this example, the bound is identical to b above to the digits calculated.

b2 <- gsDesign::gsDesign(test.type = 2, sfu = sfLDOF, alpha = 0.025, timing = c(.5, .75, 1))$upper$bound

b2

#> [1] 2.962588 2.359018 2.014084Now we replicate with gs_design_ahr():

design2ss <- gs_design_ahr(

enrollRates = enrollRates,

failRates = failRates,

analysisTimes = 36, # Trial duration

IF = c(.5, .75, 1), # Information fraction at analyses

upper = gs_spending_bound,

upar = list(sf = gsDesign::sfLDOF, total_spend = 0.025),

lower = gs_spending_bound,

lpar = list(sf = gsDesign::sfLDOF, total_spend = 0.025),

h1_spending = FALSE

)Design bounds are confirmed with:

| Analysis | Bound | Time | N | Events | Z | Probability | AHR | theta | info | info0 |

|---|---|---|---|---|---|---|---|---|---|---|

| 1 | Upper | 16.3 | 451 | 164 | 2.963 | 0.0594 | 0.801 | 0.221 | 40.14 | 40.97 |

| 2 | Upper | 23.9 | 451 | 246 | 2.359 | 0.5425 | 0.728 | 0.318 | 60.14 | 61.45 |

| 3 | Upper | 36.0 | 451 | 328 | 2.014 | 0.9000 | 0.693 | 0.366 | 80.82 | 81.94 |

| 1 | Lower | 16.3 | 451 | 164 | -2.963 | 0.0000 | 0.801 | 0.221 | 40.14 | 40.97 |

| 2 | Lower | 23.9 | 451 | 246 | -2.359 | 0.0000 | 0.728 | 0.318 | 60.14 | 61.45 |

| 3 | Lower | 36.0 | 451 | 328 | -2.014 | 0.0000 | 0.693 | 0.366 | 80.82 | 81.94 |

The bounds can be plotted easily:

ggplot(data = design2ss$bound, aes(x = Events, y = Z, group = Bound)) +

geom_line(aes(linetype = Bound)) +

geom_point() +

ggtitle("2-sided symmetric bounds with O'Brien-Fleming-like spending")

Asymmetric 2-sided bounds

Asymmetric 2-sided designs are more common than symmetric since the objectives of the two bounds tend to be different. There is often caution to analyze early for efficacy or to use other than a conservative bound; both of these principles have been used with the example designs so far. Stopping when there is a lack of benefit for experimental treatment over control or for an overt indication of an unfavorable trend generally might be examined early and bounds be less stringent. We will add an early futility analysis where if there is a nominal 1-sided p-value of 0.05 in the wrong direction (\(Z=\Phi^{-1}(0.05)\) after 30% or 50% of events have accrued. This might be considered a disaster check. After this point in time, there may not be a perceived need for further futility analysis. For efficacy, we add an infinite bound at this first interim analysis.

b2sa <- c(Inf, b) # Same efficacy bound as before

a2sa <- c(rep(qnorm(.05), 2), rep(-Inf, 2)) # Single futility analysis bound

design2sa <- gs_design_nph(

enrollRates = enrollRates,

failRates = failRates,

analysisTimes = 36, # Trial duration

upper = gs_b, upar = b2sa,

lower = gs_b, lpar = a2sa, # Asymmetric 2-sided bound

IF = c(.3, .5, .75, 1)

)We now have a slightly larger sample size to account for the possibility of an early futility stop.

design2sa$enrollRates %>% summarise(N = ceiling(sum(rate * duration)))

#> # A tibble: 1 × 1

#> N

#> <dbl>

#> 1 459Bounds are now:

| Analysis | Bound | Z | Probability | theta | info | Time | AHR | Events | info0 | N |

|---|---|---|---|---|---|---|---|---|---|---|

| 2 | Upper | 2.96 | 0.0609 | 0.22 | 40.8 | 16.30 | 0.8 | 166.7 | 41.7 | 459 |

| 3 | Upper | 2.36 | 0.5506 | 0.32 | 61.2 | 23.89 | 0.7 | 250.1 | 62.5 | 459 |

| 4 | Upper | 2.01 | 0.9000 | 0.37 | 82.2 | 36.00 | 0.7 | 333.4 | 83.4 | 459 |

| 1 | Lower | -1.64 | 0.0111 | 0.13 | 24.7 | 12.04 | 0.9 | 100.0 | 25.0 | 459 |

| 2 | Lower | -1.64 | 0.0114 | 0.22 | 40.8 | 16.30 | 0.8 | 166.7 | 41.7 | 459 |

We see that there does not need to be a (finite) stopping rule for each bound at each analysis. That is, there is a futility bound only at the first 2 analyses and an efficacy bound only for the last 3 analyses. We still have the targeted power. The efficacy bound has not changed from our first design. The reason for not changing it is to address regulator concerns that such bounds are not always stopped for. However, if the bound is obeyed, the Type I error can be seen to be slightly reduced as follows. This price is generally acceptable for regulatory acceptance and operational flexibility that is enabled by controlling Type I error even if the futility bound is not obeyed.

events <- (design2sa$bounds %>% filter(Bound == "Upper"))$Events

gs_power_nph(

enrollRates = design1s$enrollRates,

failRates = design2sa$failRates %>% mutate(hr = 1),

upar = b2sa,

lpar = a2sa,

events = events,

maxEvents = max(events)

) %>%

# filter eliminates bounds that are infinite

filter(abs(Z) < Inf) %>% kable(digits = c(0, 0, 2, 4, 1, 1, 2, 2, 1, 1))| Analysis | Bound | Z | Probability | Time | Events | AHR | theta | info | info0 |

|---|---|---|---|---|---|---|---|---|---|

| 2 | Upper | 2.96 | 0.0015 | 15.5 | 166.7 | 1 | 0 | 41.7 | 41.7 |

| 3 | Upper | 2.36 | 0.0096 | 21.6 | 250.1 | 1 | 0 | 62.5 | 62.5 |

| 4 | Upper | 2.01 | 0.0250 | 31.2 | 333.4 | 1 | 0 | 83.4 | 83.4 |

| 1 | Lower | -1.64 | 0.0500 | 11.8 | 100.0 | 1 | 0 | 25.0 | 25.0 |

| 2 | Lower | -1.64 | 0.0767 | 15.5 | 166.7 | 1 | 0 | 41.7 | 41.7 |

Confirmation by Simulation

We do a small simulation to approximate the boundary crossing probabilities just shown in the 2-sided asymmetric design design2sa. First, we generate test statistics for each analysis for a number of simulated trials.

fr <- simfix2simPWSurv(failRates = failRates)

nsim <- 200 # Number of trial simulations

simresult <- NULL

N <- ceiling(design2sa$enrollRates %>% summarize(N = sum(rate / 2 * duration))) * 2

K <- max(design2sa$bounds$Analysis)

events <- ceiling(sort(unique(design2sa$bounds$Events)))

for (i in 1:nsim) {

sim <- simPWSurv(

n = as.numeric(N),

enrollRates = design2sa$enrollRates,

failRates = fr$failRates,

dropoutRates = fr$dropoutRates

)

for (k in 1:K) {

Z <- sim %>%

cutDataAtCount(events[k]) %>% # Cut simulation for analysis at targeted events

tensurv(txval = "Experimental") %>%

tenFH(rg = tibble(rho = 0, gamma = 0))

simresult <- rbind(

simresult,

tibble(sim = i, k = k, Z = -Z$Z) # Change sign for Z

)

}

}Now we analyze the individual trials and summarize results. A larger simulation would be required to more accurately assess the asymptotic approximation for boundary crossing probabilities.

bds <- tibble::tibble(

k = sort(unique(design2sa$bounds$Analysis)),

upper = (design2sa$bounds %>% filter(Bound == "Upper"))$Z,

lower = (design2sa$bounds %>% filter(Bound == "Lower"))$Z

)

trialsum <- simresult %>%

full_join(bds, by = "k") %>%

filter(Z < lower | Z >= upper | k == K) %>%

group_by(sim) %>%

slice(1) %>%

ungroup()

trialsum %>% summarize(

nsim = n(),

"Early futility (%)" = 100 * mean(Z < lower),

"Power (%)" = 100 * mean(Z >= upper)

)

#> # A tibble: 1 × 3

#> nsim `Early futility (%)` `Power (%)`

#> <int> <dbl> <dbl>

#> 1 200 1 90Differences From gsDesign

The sample size computation from the gsdmvn package is slightly different than from the gsDesign package for proportional hazards model. We demonstrate this with the bounds for the 1-sided test above under a proportional hazards model with an underlying hazard ratio of 0.7.

design1sPH <- gs_design_nph(

enrollRates = enrollRates,

failRates = failRates %>% mutate(hr = .7),

analysisTimes = 36, # Trial duration

upar = b,

lpar = rep(-Inf, 3), # No futility bound

IF = c(.5, .75, 1)

)

design1sPH$enrollRates %>% kable()| Stratum | duration | rate | N |

|---|---|---|---|

| All | 2 | 12.76886 | 25.53771 |

| All | 2 | 25.53771 | 76.61314 |

| All | 2 | 38.30657 | 153.22628 |

| All | 6 | 51.07543 | 459.67883 |

This results in total sample size:

Now we derive a design with the same targeted properties using the gsDesign package.

x <- gsSurv(

k = 3,

test.type = 1,

alpha = .025,

beta = .1,

timing = c(.5, .75, 1),

sfu = sfLDOF,

lambda = log(2) / medianSurv,

hr = .7,

eta = .001, # Dropout rate

gamma = c(.25, .5, .75, 1),

R = c(2, 2, 2, 6),

minfup = 24,

T = 36

)

gsBoundSummary(x) %>% kable(row.names = FALSE)| Analysis | Value | Efficacy |

|---|---|---|

| IA 1: 50% | Z | 2.9626 |

| N: 456 | p (1-sided) | 0.0015 |

| Events: 168 | ~HR at bound | 0.6328 |

| Month: 17 | P(Cross) if HR=1 | 0.0015 |

| P(Cross) if HR=0.7 | 0.2580 | |

| IA 2: 75% | Z | 2.3590 |

| N: 456 | p (1-sided) | 0.0092 |

| Events: 252 | ~HR at bound | 0.7427 |

| Month: 24 | P(Cross) if HR=1 | 0.0096 |

| P(Cross) if HR=0.7 | 0.6853 | |

| Final | Z | 2.0141 |

| N: 456 | p (1-sided) | 0.0220 |

| Events: 336 | ~HR at bound | 0.8025 |

| Month: 36 | P(Cross) if HR=1 | 0.0250 |

| P(Cross) if HR=0.7 | 0.9000 |

Enrollment rates are:

| Stratum 1 | |

|---|---|

| 0-2 | 12.61705 |

| 2-4 | 25.23410 |

| 4-6 | 37.85115 |

| 6-12 | 50.46820 |